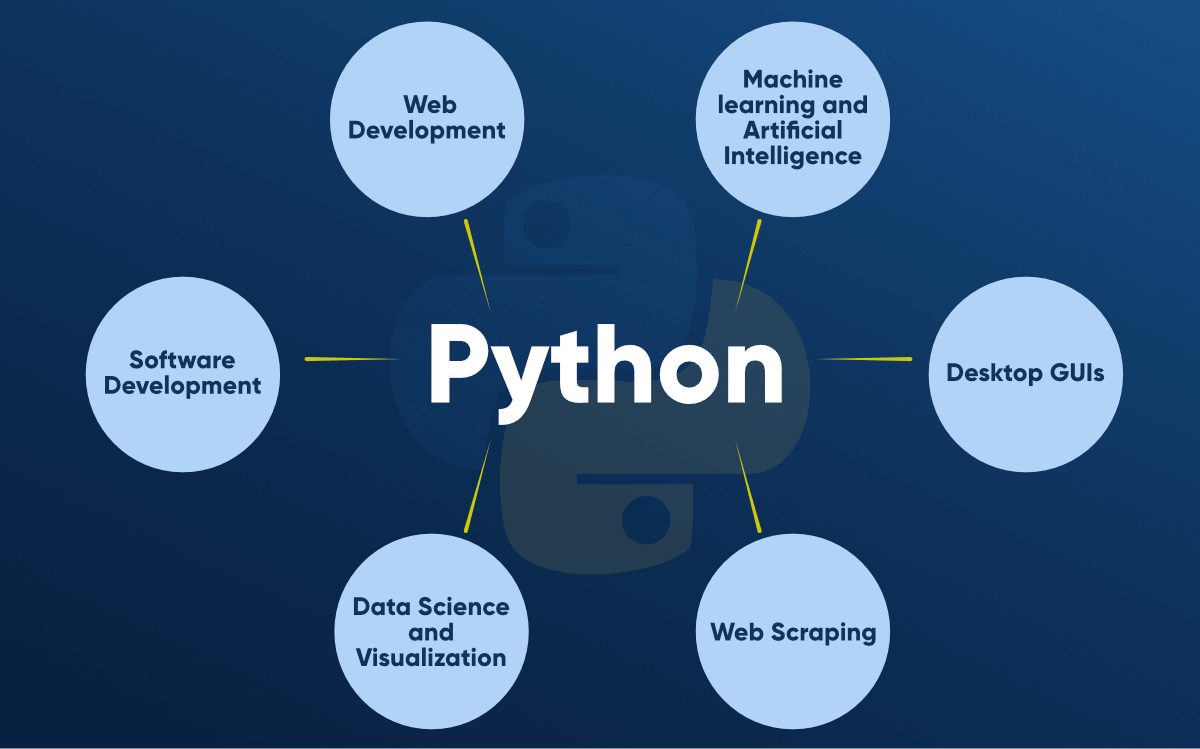

Hierarchical clustering is one of the most popular unsupervised learning strategies. The term comes from the word hierarchy, which refers to arranging things in ranked levels. The method identifies items in the dataset that share the attributes being considered and groups them into clusters. Ultimately, you are left with a single large cluster composed of groups of data points or groups of other groups. The results are usually organized into a dendrogram, a tree diagram that graphically illustrates the hierarchical links between the underlying groups. Hierarchical clustering approaches the clustering problem in two different ways, bottom-up (agglomerative) and top-down (divisive), and of the two, agglomerative clustering is the more commonly used. The types and approaches of hierarchical clustering in Python are described below:

Types of Hierarchical Clustering in Python:

- Agglomerative clustering:

This bottom-up method starts at the leaves of the hierarchy and works up to the root. Initially, every data point in the dataset is treated as a separate, single-element cluster. At each step, the two most similar clusters are merged, so every iteration leaves one fewer cluster than the previous one. The process repeats until a single large cluster remains, whose components are clusters with similar features. Once clustering is finished, you can display the resulting clusters with a scatter plot, or the full merge history with a dendrogram.
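The merge loop described above can be sketched in plain Python. This is a minimal, naive illustration rather than an efficient implementation: the function name `agglomerative`, its `linkage` options, and the sample points are assumptions made for this example. It uses Euclidean distance and records each merge as (first cluster, second cluster, distance), where clusters are lists of point indices.

```python
from math import dist  # Euclidean distance (Python 3.8+)


def agglomerative(points, linkage="single"):
    """Naive bottom-up clustering; returns the merges in the order performed."""
    # Every point starts as its own single-element cluster (a list of indices).
    clusters = [[i] for i in range(len(points))]
    merges = []
    while len(clusters) > 1:
        best = None
        # Compare every pair of current clusters.
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                pair_d = [dist(points[i], points[j])
                          for i in clusters[a] for j in clusters[b]]
                if linkage == "single":      # closest pair of members
                    d = min(pair_d)
                elif linkage == "complete":  # farthest pair of members
                    d = max(pair_d)
                elif linkage == "average":   # mean over all member pairs
                    d = sum(pair_d) / len(pair_d)
                else:
                    raise ValueError(f"unknown linkage: {linkage}")
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((clusters[a], clusters[b], d))
        # Replace the two most similar clusters with their union,
        # so each iteration leaves one fewer cluster than the last.
        merged = clusters[a] + clusters[b]
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)]
        clusters.append(merged)
    return merges


# Hypothetical 2-D sample points for illustration.
points = [(0, 0), (0, 1), (5, 5), (5, 6), (10, 0)]
merges = agglomerative(points)
for left, right, d in merges:
    print(f"merge {left} + {right} at distance {d:.2f}")
```

Each pass scans every pair of clusters, so this sketch only suits tiny inputs. For real data, `scipy.cluster.hierarchy.linkage` (with `dendrogram` for plotting) or scikit-learn's `AgglomerativeClustering` are the usual choices.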

- Divisive clustering:

Divisive clustering is a top-down strategy that works by splitting clusters apart; it is agglomerative clustering in reverse. Because all data points are initially assumed to be homogeneous, clustering begins with a single large cluster containing every data point. At each iteration, the most heterogeneous cluster is split into two distinct groups, reducing the variation within each. The process continues until the desired number of clusters is reached. However, divisive clustering's starting premise, that the data is homogeneous, is less valid than agglomerative clustering's premise that the data points are distinct.
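The text above does not say how the most heterogeneous cluster should be split. One common choice, used in the sketch below as an assumption, is bisecting k-means: measure each cluster's heterogeneity by its sum of squared distances to the centroid (SSE), then split the worst cluster in two with 2-means. The helper names (`centroid`, `sse`, `split_in_two`, `divisive`), the farthest-pair seeding, the fixed iteration count, and the sample points are illustrative choices for 2-D data.

```python
from math import dist  # Euclidean distance (Python 3.8+)


def centroid(cluster):
    """Mean of a list of 2-D points."""
    n = len(cluster)
    return (sum(p[0] for p in cluster) / n, sum(p[1] for p in cluster) / n)


def sse(cluster):
    """Sum of squared distances to the centroid: larger means more heterogeneous."""
    c = centroid(cluster)
    return sum(dist(p, c) ** 2 for p in cluster)


def split_in_two(cluster, iters=10):
    """2-means on one cluster, seeded with its two farthest-apart points."""
    a, b = max(((p, q) for p in cluster for q in cluster),
               key=lambda pq: dist(*pq))
    for _ in range(iters):
        # Assign each point to the nearer of the two centres, then recompute them.
        left = [p for p in cluster if dist(p, a) <= dist(p, b)]
        right = [p for p in cluster if dist(p, a) > dist(p, b)]
        a, b = centroid(left), centroid(right)
    return left, right


def divisive(points, k):
    """Top-down clustering: start with one cluster and split until k remain."""
    clusters = [list(points)]
    while len(clusters) < k:
        # Only clusters with at least two distinct points can be split.
        candidates = [c for c in clusters if len(set(c)) > 1]
        if not candidates:
            break
        worst = max(candidates, key=sse)  # most heterogeneous cluster
        clusters.remove(worst)
        clusters.extend(split_in_two(worst))
    return clusters


# Hypothetical 2-D sample points for illustration.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (20, 0), (21, 0)]
for group in divisive(points, 3):
    print(group)
```

scikit-learn (version 1.1 and later) also provides `BisectingKMeans`, which applies the same split-the-worst-cluster idea to larger datasets.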

Applications of Hierarchical Clustering:

- Biology:

Clustering DNA sequences is one of the most challenging problems in bioinformatics. Biologists can use hierarchical clustering to examine the genetic relationships among organisms and divide them into taxonomic categories. The resulting tree makes those underlying relationships quick to analyze and visualize.
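To make the biology use case concrete, here is a minimal sketch of UPGMA, a classic average-linkage form of agglomerative clustering that builds a tree from pairwise genetic distances. The function `upgma`, the taxa `A` to `D`, and the distance values are hypothetical; taxon labels are assumed to be unique, and the output is a Newick-style string without branch lengths.

```python
def upgma(labels, matrix):
    """Average-linkage (UPGMA) tree from a symmetric distance matrix.

    labels: unique taxon names; matrix[i][j]: distance between taxa i and j.
    Returns a Newick-style string (branch lengths omitted for brevity).
    """
    # Active clusters: Newick fragment -> number of leaves it contains.
    size = {name: 1 for name in labels}
    # Distances between active clusters, keyed by the pair of fragments.
    d = {frozenset((labels[i], labels[j])): matrix[i][j]
         for i in range(len(labels)) for j in range(i + 1, len(labels))}
    while len(size) > 1:
        # Merge the closest pair of clusters.
        pair = min(d, key=d.get)
        a, b = sorted(pair)
        new = f"({a},{b})"
        for c in size:
            if c in pair:
                continue
            # UPGMA update: size-weighted average of the two old distances.
            d[frozenset((new, c))] = (
                size[a] * d[frozenset((a, c))] + size[b] * d[frozenset((b, c))]
            ) / (size[a] + size[b])
        # Drop every distance that involves one of the merged clusters.
        d = {key: v for key, v in d.items() if not (key & pair)}
        size[new] = size.pop(a) + size.pop(b)
    return next(iter(size))


# Hypothetical taxa and pairwise distances, for illustration only.
labels = ["A", "B", "C", "D"]
matrix = [
    [0, 2, 6, 10],
    [2, 0, 6, 10],
    [6, 6, 0, 10],
    [10, 10, 10, 0],
]
print(upgma(labels, matrix))
```

Because the closest taxa are joined first, the nesting of the parentheses mirrors the order in which the groups were merged.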

- Image processing:

In image processing, hierarchical clustering can group similar pixels or regions of an image according to their color, intensity, or other attributes. This is helpful for downstream tasks such as object detection, image segmentation, and classification.

- Marketing:

Marketing professionals can use hierarchical clustering to build a hierarchy of customer segments based on purchase behavior, supporting better marketing tactics and product recommendations. In retail settings, for example, customers who are low, medium, or high spenders may be shown suggestions for different products.
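The spending-tier idea can be sketched by stopping agglomerative clustering early, once three clusters remain, and naming the clusters by their average spend. The customer spend values below are made-up illustration data, and the function name `spend_tiers` and the choice of average linkage are assumptions for this example.

```python
def spend_tiers(spend, names=("low", "medium", "high")):
    """Average-linkage agglomerative clustering on 1-D spend values, stopped
    once len(names) clusters remain; clusters are named by mean spend."""
    k = len(names)
    clusters = [[i] for i in range(len(spend))]  # one cluster per customer
    while len(clusters) > k:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Average linkage: mean absolute spend gap between members.
                gaps = [abs(spend[i] - spend[j])
                        for i in clusters[a] for j in clusters[b]]
                d = sum(gaps) / len(gaps)
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        merged = clusters[a] + clusters[b]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (a, b)]
        clusters.append(merged)
    # Order the remaining clusters from lowest to highest mean spend.
    clusters.sort(key=lambda c: sum(spend[i] for i in c) / len(c))
    return {i: name for name, members in zip(names, clusters) for i in members}


# Hypothetical annual spend per customer (illustration data only).
spend = [120, 135, 90, 560, 610, 2500, 2300, 100]
tiers = spend_tiers(spend)
print([tiers[i] for i in range(len(spend))])
```

Stopping the merges at a chosen number of clusters is equivalent to cutting the dendrogram at that level; with SciPy, `scipy.cluster.hierarchy.fcluster` performs this cut on a linkage matrix.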

- Social network analysis:

Social networks are a rich source of data. Hierarchical clustering can be used to locate communities or groups within a network and to understand how they relate to one another and to the overall network structure.

Advantages of Hierarchical clustering:

A key benefit of hierarchical clustering is that it shows in detail which observations are most similar to one another. Many other techniques, such as k-means, return only the cluster ID to which each observation belongs, which offers a coarser level of detail. When you have a small dataset you care about and want to find the observations most similar to particular ones, hierarchical clustering can be quite helpful.

Bottom line:

Hierarchical clustering is an excellent method for finding patterns in small to medium-sized datasets, despite its limits on very large ones: standard agglomerative algorithms compare every pair of points, so their time and memory grow at least quadratically with the number of points. The ideas above are the fundamentals of hierarchical clustering, an unsupervised learning method, as it is applied in Python. To learn more, consider online courses on supervised and reinforcement learning algorithms and their use in the trading industry.